
Bayesian Network Models in Ecology

Metrics for evaluating performance and uncertainty of Bayesian network models

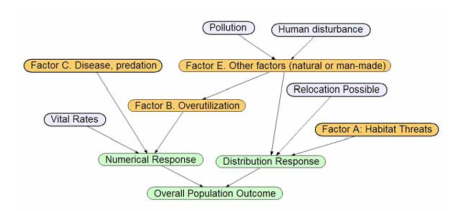

This paper presents a selected set of existing and new metrics for gauging Bayesian network model performance and uncertainty. Selected existing and new metrics are discussed for:

- conducting model sensitivity analysis (variance reduction, entropy reduction, case file simulation);
- evaluating scenarios (influence analysis);
- depicting model complexity (numbers of model variables, links, node states, conditional probabilities, and node cliques);
- assessing prediction performance (confusion tables, covariate- and conditional probability-weighted confusion error rates, area under receiver operating characteristic curves, k-fold cross-validation, spherical payoff, Schwarz' Bayesian information criterion, true skill statistic, Cohen's kappa); and
- evaluating uncertainty of model posterior probability distributions (Bayesian credible interval, posterior probability certainty index, certainty envelope, Gini coefficient).

Examples are presented of applying the metrics to 3 real-world models of wildlife population analysis and management. Using such metrics can substantially strengthen model credibility, acceptance, and appropriate application, particularly when informing management decisions.
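Several of the prediction-performance and sensitivity metrics named above reduce to short calculations. The following is a minimal Python sketch, assuming textbook definitions of each metric and a confusion table whose rows are observed states and whose columns are predicted (most probable) states. The function names are hypothetical. The formulas are not necessarily identical to those used in the paper or in any particular Bayesian network software.

```python
import math

# Assumed convention: confusion[i][j] = number of cases with observed state i
# whose most probable predicted state was j.

def error_rate(confusion):
    """Fraction of cases whose predicted state differs from the observed state."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return 1.0 - correct / total

def cohens_kappa(confusion):
    """Agreement between observed and predicted states beyond chance agreement."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    p_obs = sum(confusion[i][i] for i in range(n)) / total
    row_tot = [sum(confusion[i]) for i in range(n)]
    col_tot = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    p_exp = sum(row_tot[k] * col_tot[k] for k in range(n)) / total ** 2
    return (p_obs - p_exp) / (1.0 - p_exp)

def true_skill_statistic(confusion):
    """TSS = sensitivity + specificity - 1 for a 2x2 table.
    Assumes state 0 is the 'positive' state (e.g., species present)."""
    tp, fn = confusion[0]
    fp, tn = confusion[1]
    return tp / (tp + fn) + tn / (tn + fp) - 1.0

def spherical_payoff(posteriors, observed):
    """Mean over cases of P(observed state) / sqrt(sum of squared state probabilities).
    posteriors[c] is the posterior distribution for case c; observed[c] is its true state index."""
    scores = []
    for probs, k in zip(posteriors, observed):
        norm = math.sqrt(sum(p * p for p in probs))
        scores.append(probs[k] / norm)
    return sum(scores) / len(scores)

def entropy_reduction(joint):
    """Mutual information (bits) between a query node Q and a finding node F,
    computed from their joint distribution joint[q][f]."""
    p_q = [sum(row) for row in joint]
    p_f = [sum(joint[q][f] for q in range(len(joint))) for f in range(len(joint[0]))]
    mi = 0.0
    for q, row in enumerate(joint):
        for f, p in enumerate(row):
            if p > 0:  # zero-probability cells contribute nothing
                mi += p * math.log2(p / (p_q[q] * p_f[f]))
    return mi

if __name__ == "__main__":
    table = [[40, 10], [5, 45]]  # illustrative counts, not data from the paper
    print(error_rate(table), cohens_kappa(table), true_skill_statistic(table))
    print(spherical_payoff([[0.9, 0.1], [0.5, 0.5]], [0, 1]))
    print(entropy_reduction([[0.5, 0.0], [0.0, 0.5]]))
```

For the illustrative table, the error rate is 0.15, and Cohen's kappa and the true skill statistic both come to about 0.7. A spherical payoff of 1 means the model placed all of its probability on the observed state. The entropy reduction is 1 bit when the finding fully determines a two-state query node, and 0 when the two nodes are independent.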

Highlights

- Metrics and methods to evaluate performance and uncertainty of probability networks.
- Used for analyzing sensitivity, scenarios, complexity, accuracy, and model uncertainty.
- Case examples illustrate use with Bayesian network models.
- Use enhances model credibility, acceptance, and appropriate application.

- Forecasting the Range-wide Status of Polar Bears at Selected Times in the 21st Century

Bruce G. Marcot

USDA Forest Service
